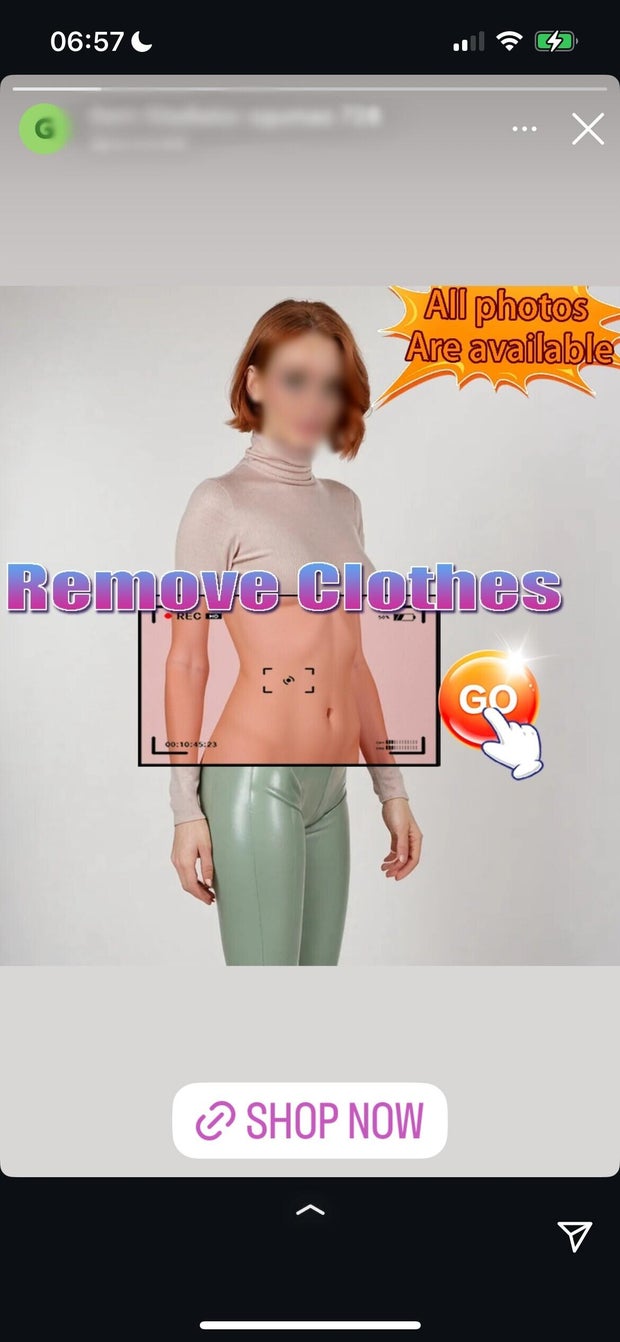

Meta has removed a number of ads promoting "nude" apps, AI tools used to create sexually explicit deepfakes from images of real people, after a CBS News investigation found hundreds of such ads on its platforms.

A Meta spokesperson confirmed the removals to CBS News in an emailed statement.

CBS News found dozens of these ads in the "Stories" feature of Meta's Instagram platform, promoting AI tools that in many cases advertised the ability to "upload photos" and "see anyone naked." Other ads in Instagram Stories promoted the ability to upload and manipulate videos of real people. One promotional ad even read "How is this filter allowed?" as text beneath an example of a nude deepfake.

One ad promoted its AI product using highly sexualized, underwear-clad deepfake images of Scarlett Johansson and Anne Hathaway. Some ads' URLs redirected to websites that promote manipulating images of real people to depict them performing sexual acts. Some apps charged users between $20 and $80 to access these "exclusive" and "advance" features. In other cases, an ad's URL redirected the user to Apple's App Store, where the "nude" app could be downloaded.

An analysis of ads in the Meta Ad Library found that there were, at a minimum, hundreds of these ads available across the company's social media platforms, including Facebook, Instagram, Threads, the Facebook Messenger app and the Meta Audience Network, which allows Meta advertisers to reach users on mobile apps and websites that partner with the company.

Many of these ads are specifically targeted at men aged 18 to 65 and are active in the United States, the European Union and the United Kingdom, according to Meta's own advertising library data.

A Meta spokesperson told CBS News that the spread of such AI-generated content is an ongoing problem and that the company faces increasingly complex challenges in trying to combat it.

"The people behind these exploitative apps constantly evolve their tactics to evade detection, so we're continuously working to strengthen our enforcement," a Meta spokesperson said.

CBS News found that even after Meta removed the ads that were initially flagged, ads for "nude" deepfake tools were still available on the company's Instagram platform.

Deepfakes are images, audio recordings or videos of real people that have been manipulated with artificial intelligence to misrepresent someone as saying or doing something they did not actually say or do.

Last month, President Trump signed into law the bipartisan "Take It Down Act," which requires websites and social media companies to remove deepfake content within 48 hours of notice from a victim.

While the law makes it illegal to "knowingly publish" or threaten to publish intimate images without a person's consent, it does not target the tools used to create such AI-generated content.

Those tools do, however, violate the platform safety and moderation rules that Apple and Meta enforce on their respective platforms.

Meta's advertising standards policy says: "Ads must not contain adult nudity and sexual activity. This includes nudity, depictions of people in explicit or sexually suggestive positions, or activities that are sexually suggestive."

Under its "bullying and harassment" policy, Meta also bans "derogatory Photoshop or drawings" on its platforms. The company said its rules are intended to prevent users from sharing, or threatening to share, nonconsensual intimate imagery.

Apple's App Store guidelines explicitly ban "content that is offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy."

Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell Tech, has been studying the surge in AI deepfake networks marketing on social platforms. In a phone interview with CBS News on Tuesday, he said that over that period he had seen thousands of these ads across Meta platforms, as well as on platforms such as X and Telegram.

While he described Telegram and X as having a structural "lawlessness" that allows this kind of content, he believes Meta's leadership lacks the will to address the issue, despite having content moderators in place.

"I do think that the trust and safety teams at these companies care. Frankly, I don't think they care at the very top of the company in Meta's case," he said. "They're clearly under-resourcing the teams that have to fight this stuff, because as sophisticated as these (dark) networks are … they don't have Meta money to throw at it."

Mantzarlis also said his research found that "nude" deepfake generators are available for download on both Apple's App Store and Google's Play Store, and he expressed frustration that these massive platforms are failing to enforce their rules against such content.

"The problem with apps is that they have this dual-use front, where they present themselves on the App Store as something fun, but then they market on Meta with nudification as their primary purpose. So when these apps come up for review on the Apple or Google store, the stores don't necessarily have the grounds to ban them."

"There needs to be cross-industry cooperation, so that if an app or website markets itself as a nudification tool anywhere on the web, everyone else can say, 'All right, I don't care what you present yourself as on my platform, you're gone,'" Mantzarlis added.

CBS News reached out to both Apple and Google for comment on how they moderate their respective platforms. Neither company had responded by the time of writing.

The promotion of such apps by major tech companies raises serious questions about both user consent and online safety for minors. A CBS News analysis of one "nude" website promoted on Instagram showed that the site did not require any form of age verification before a user uploaded a photo to generate a deepfake image.

Such problems are widespread. In December, CBS News' 60 Minutes reported on the lack of age verification on one of the most popular sites that use AI to generate fake nude photos of real people.

Although visitors were told that they must be at least 18 years old to use the site and that "processing of minors is impossible," once a user clicked "Accept" on the age warning prompt, photo uploads were available immediately, with no further age verification.

Data also show that a high proportion of underage teenagers have interacted with deepfake content. A March 2025 study by the child protection nonprofit Thorn found that among teens, 41% said they had heard the term "deepfake nudes," while 10% reported personally knowing someone who had had deepfake nude imagery created of them.